One of the things we love about attending AWS re:Invent is learning about the latest tools from Amazon that will help us build better software. We came back from Vegas excited about several new releases that have the potential to contribute to our data discovery work.

1. Amazon Kendra

Delivering high-quality search results is a perennial problem. Too often users struggle to find the information they need in a timely fashion. Amazon Kendra promises to change this. It is a machine-learning-powered search tool that connects to your enterprise data repositories and automatically indexes and categorizes your data. Kendra can search data stored in SQL databases, documents on SharePoint, and PDFs in S3.

Once Kendra learns about your documents, you can search for just about anything using natural language. Kendra can answer questions like “How much maternity leave do I get?” or “What is the process for connecting to our VPN?” by identifying and pulling the relevant answers from within documents. Users can also provide feedback on the quality of search results, so Kendra can learn and improve over time.
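To make the question-and-feedback flow concrete, here is a minimal Python sketch. It assumes a Kendra client object that exposes `query` and `submit_feedback` the way the boto3 `kendra` client does, and it assumes the response field names (`ResultItems`, `Type`, `DocumentExcerpt`, `QueryId`) match the Kendra Query API as we understand it. The helper names `ask` and `mark_relevant` are our own, and the details should be checked against the current AWS documentation before relying on them.

```python
def extract_excerpts(response, result_type="ANSWER"):
    """Pull the excerpt text out of a Kendra-style query response.

    Assumed response shape: {"QueryId": ..., "ResultItems": [{"Id": ...,
    "Type": ..., "DocumentExcerpt": {"Text": ...}}, ...]}.
    """
    excerpts = []
    for item in response.get("ResultItems", []):
        if item.get("Type") == result_type:
            excerpts.append(
                {
                    "result_id": item.get("Id"),
                    "text": item.get("DocumentExcerpt", {}).get("Text", ""),
                }
            )
    return excerpts


def ask(client, index_id, question):
    """Send a natural-language question and return (query_id, answer excerpts)."""
    response = client.query(IndexId=index_id, QueryText=question)
    return response.get("QueryId"), extract_excerpts(response)


def mark_relevant(client, index_id, query_id, result_id):
    """Tell the index that a result was helpful (hypothetical helper)."""
    client.submit_feedback(
        IndexId=index_id,
        QueryId=query_id,
        RelevanceFeedbackItems=[
            {"ResultId": result_id, "RelevanceValue": "RELEVANT"}
        ],
    )
```

With boto3 installed and credentials configured, `client` would presumably be `boto3.client("kendra")` and `index_id` the ID of an index you have already created. Passing the client in as a parameter keeps the sketch easy to try against a stub before pointing it at a real index.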

2. Amazon EC2 Inf1

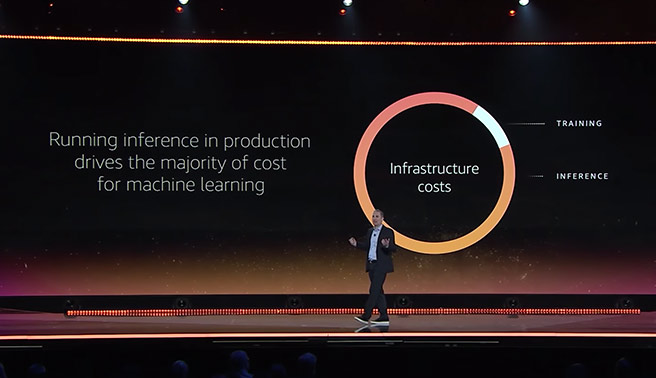

During re:Invent, AWS announced the availability of Amazon EC2 Inf1 machine learning instances using Amazon’s custom-built Inferentia chip, specialized for handling processor-intensive inference work. We had the opportunity to test drive the Inf1 instances on ML projects to see the performance improvements for ourselves, and we’re excited to apply them to our data discovery work to speed up large-scale ML applications like speech recognition, object recognition, and natural language processing!

The Inferentia chips have a unique core design, with dedicated RAM and cache, that allows them to speed up calculations by 3x compared to existing technology. The Inf1 instances also provide cost savings of up to 40% per inference!

3. Generative Adversarial Networks (GANs)

AWS continues to be an industry innovator in the area of data discovery, particularly in the ways it prototypes machine learning use cases. In the past, AWS has demonstrated the power of deep learning and supervised learning through DeepLens and DeepRacer. At re:Invent 2019, AWS showcased the potential of generative adversarial networks (GANs) through its latest project, DeepComposer. Although DeepComposer is a simple “make-your-own-music” tool, it shows the benefit of a “generator-discriminator” model when asking machines to be more creative. In this model, the generative network creates candidates that the discriminative network evaluates. The generative network tries to “fool” the discriminative network by producing new candidates that the discriminator cannot recognize as artificial.
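The generator-discriminator loop is easier to see in a toy setting. The sketch below is our own illustration in plain Python, not how DeepComposer is implemented: the "data" is a single number, the generator is a line `a * z + b`, the discriminator is a one-input logistic classifier, and the gradients are worked out by hand. All names and the learning rate are assumptions chosen for readability.

```python
import math


def sigmoid(t):
    # Clamp the input so math.exp never overflows.
    t = max(-60.0, min(60.0, t))
    return 1.0 / (1.0 + math.exp(-t))


class Generator:
    """Turns random noise z into a candidate sample: a * z + b."""

    def __init__(self):
        self.a, self.b = 1.0, 0.0

    def sample(self, z):
        return self.a * z + self.b


class Discriminator:
    """Estimates the probability that a sample x is real."""

    def __init__(self):
        self.w, self.c = 0.0, 0.0

    def prob_real(self, x):
        return sigmoid(self.w * x + self.c)


def train_step(gen, disc, real_x, z, lr=0.05):
    """One adversarial round on a single real sample and a single noise draw."""
    fake_x = gen.sample(z)

    # Discriminator update: minimize -log D(real) - log(1 - D(fake)).
    p_real = disc.prob_real(real_x)
    p_fake = disc.prob_real(fake_x)
    grad_w = -(1.0 - p_real) * real_x + p_fake * fake_x
    grad_c = -(1.0 - p_real) + p_fake
    disc.w -= lr * grad_w
    disc.c -= lr * grad_c

    # Generator update: minimize -log D(fake), i.e. try to "fool" the
    # freshly updated discriminator into scoring the fake as real.
    p_fake = disc.prob_real(fake_x)
    grad_x = -(1.0 - p_fake) * disc.w
    gen.a -= lr * grad_x * z
    gen.b -= lr * grad_x
```

Calling `train_step` repeatedly, with `real_x` drawn from the real data and `z` from `random.gauss(0, 1)`, alternates the two roles described above: the discriminator learns to tell real from generated samples, and the generator shifts its output toward whatever the discriminator currently scores as real. A model this small is only meant to show the mechanics; it will not necessarily settle on a good solution.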

As the data in our world grows more complex, providing enhancements and analytics on that data will require machines to “create” rather than just “verify.” GANs may be a powerful tool in the future of data discovery, and the introduction of DeepComposer shows AWS’s continual desire to make the AI/ML world practical and digestible.

—

Like What You Hear? Work Here!

Are you a software engineer, interested in joining a software company that invests in its teammates and promotes a strong engineering culture? Then you’re in the right place! Check out our current Career Opportunities on Life @ BTI360. We’re always looking for like-minded engineers to join the BTI360 family.
